It’s one of the hottest topics in go-to-market today: using AI and large language models (LLMs) for data enrichment. And it’s easy to see why.

Enriching GTM data is an intricate, high-value task that involves multiple nested steps and dependencies and draws on a wide range of sources and integrations.

It’s the kind of work that AI applications appear tailor-made to tackle — a tempting proposition for enterprising engineers.

We’ve been helping businesses solve complex data challenges for nearly two decades. So naturally, we wanted to experiment with using LLMs for data enrichment as well.

Our team recently explored the potential of these tools for enrichment purposes with interesting results. Here’s what we did, and what we learned.

Identifying the Problem

Many of our customers are asking the same question: Can we rely solely on LLMs for data enrichment?

It’s a fair question. LLMs are becoming increasingly adept at tasks such as identifying mismatched information in large datasets. What the question overlooks, however, is that LLMs need context to enrich data to the standard today’s businesses require, and this is where things can start to go wrong if you rely solely on an LLM.

LLMs can be a useful tool for data enrichment. They can help collect data and evaluate cases, but they can struggle with the other half of enrichment: reaching the right conclusions and deciding what action to take. That is partly because LLMs aren’t tuned (and sometimes aren’t even able) to prioritize the most recent data, which is critical for enrichment. They may be able to gather data, but that data may not be accurate, and without accurate data, an LLM can’t be expected to make correct decisions.

With that in mind, we set out to see how LLMs could be combined with other tools to achieve more meaningful results.

Putting the LLM Through Its Paces

Our internal engineering team, led by Anne Fajkus, senior manager of data analysis, recently began exploring ways to combat a data problem our customers commonly encounter: junk CRM data, sometimes so poor that it’s difficult to even begin enriching it properly.

Fajkus and the team wanted to develop ways of identifying erroneous records at scale. As they examined the problem more closely, a potential solution began to emerge.

First, the team took a subset of data, including some of the most common fields we see in CRM data:

Company name

Website

Email domain of known contacts

They also included billing address, which is a useful datapoint for us at ZoomInfo.

Next, the team ran that sample dataset through a custom LLM agent. This gave the team a starting point by identifying fields that did not match publicly available information.

The agent returned a dataset highlighting where values conflicted with available information, such as apparently incorrect names and addresses.
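In much simplified form, the comparison step can be pictured as a field-by-field check of a CRM record against a reference record. The sketch below is not the agent we used: the field names (`company_name`, `website`, `email_domain`, `billing_address`), the normalization rules, and the sample values are all hypothetical, chosen only to show the shape of the output, which is a list of fields whose values conflict.

```python
import re
from urllib.parse import urlparse

# Hypothetical field names; a real CRM schema will differ.
FIELDS = ("company_name", "website", "email_domain", "billing_address")

# Illustrative legal suffixes to ignore when comparing company names.
LEGAL_SUFFIXES = r"\b(inc|llc|ltd|corp|corporation)\b"


def normalize(field: str, value: str) -> str:
    """Apply simple, illustrative normalization before comparing values."""
    value = value.strip().lower()
    if field == "website":
        # Accept values with or without a scheme, then drop a leading "www.".
        host = urlparse(value if "://" in value else "//" + value).hostname or ""
        return host.removeprefix("www.")
    if field == "company_name":
        # Strip punctuation and common legal suffixes.
        value = re.sub(r"[^\w\s]", "", value)
        value = re.sub(LEGAL_SUFFIXES, "", value)
    # Collapse runs of whitespace.
    return " ".join(value.split())


def find_conflicts(crm: dict, reference: dict) -> list[str]:
    """Return the fields present in both records whose normalized values differ."""
    return [
        field
        for field in FIELDS
        if field in crm
        and field in reference
        and normalize(field, crm[field]) != normalize(field, reference[field])
    ]


# Hypothetical records: a CRM entry and a reference entry for the same company.
crm = {"company_name": "Acme, Inc.", "website": "www.acme.com",
       "email_domain": "acme.com", "billing_address": "1 Main St, Springfield"}
ref = {"company_name": "Acme Corporation", "website": "https://acme.com",
       "email_domain": "acme.com", "billing_address": "100 Elm Ave, Shelbyville"}

print(find_conflicts(crm, ref))  # ['billing_address']
```

Note what the output does and doesn’t tell you: it flags that the billing addresses conflict, but not which one is current or what to do about it.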

This is the point at which some companies might call the job done. What this output doesn’t offer, however, is insight into why those datapoints are inaccurate, or how to reconcile them. We know something is wrong with the record, and using an LLM to gather data from, say, a company website can highlight that. But not all website data is accurate. And even when it is, it doesn’t tell us the right action to take, because the LLM lacks the context needed to make that decision.

For that context, you need more information — so we turned to our Go-to-Market Intelligence Platform.

Diving Deeper with Match Insights

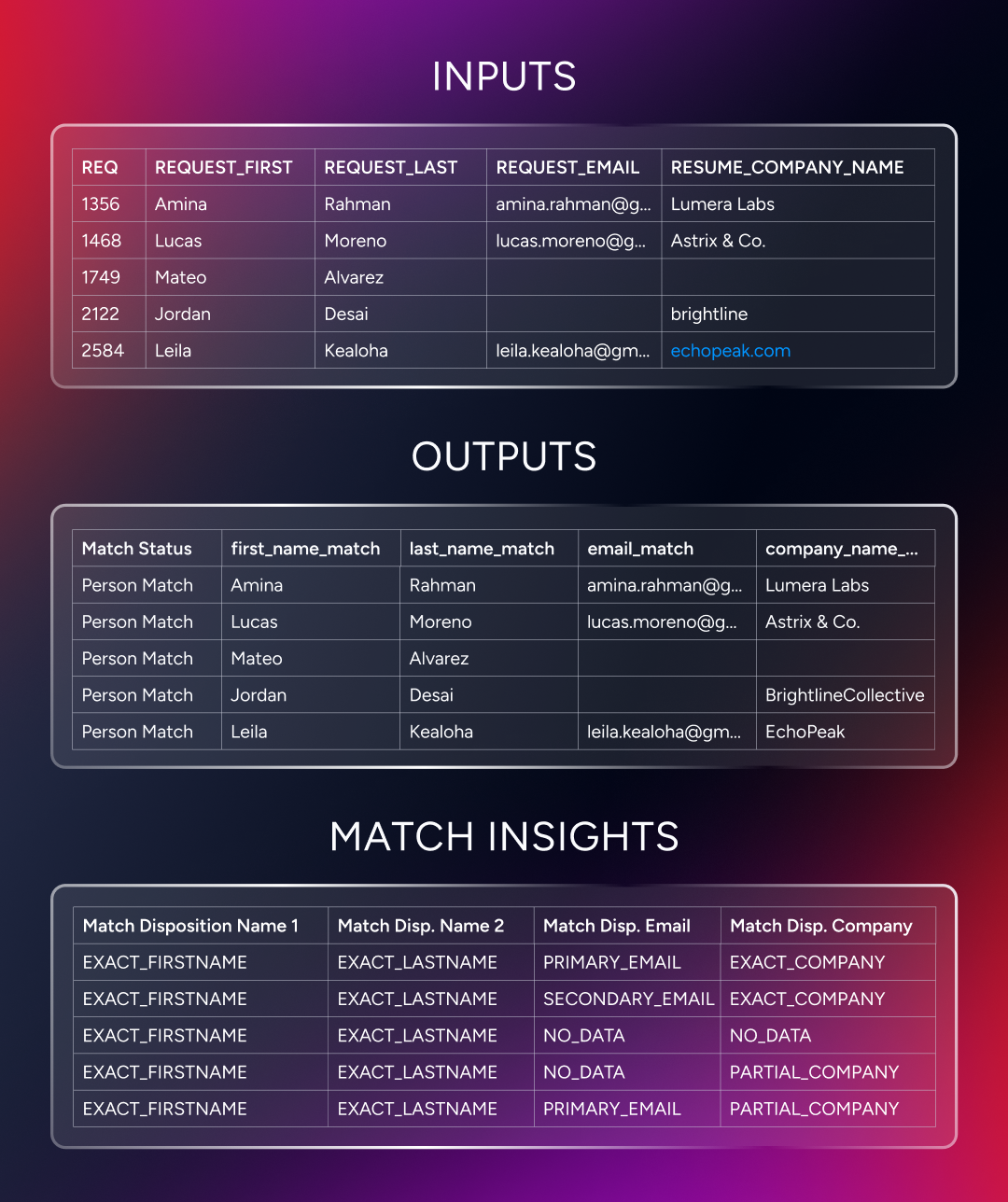

Our matching process includes a feature known as Match Insights, which gives customers greater clarity into how their CRM data aligns with ZoomInfo’s proprietary database when they upload lists of target accounts.

Match Insights gives our customers complete transparency into exactly why certain records matched, partially matched, or didn’t match at all.

This covers everything from obvious, significant discrepancies, such as a mismatch between an organization’s name and its primary website domain, to subtle differences such as conflicting address information. This helps our customers better understand and refine their data inputs for optimal accuracy and gives them greater confidence in their validation efforts.
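Match Insights’ internal logic isn’t described here, but the general idea of reporting why a record matched, partially matched, or didn’t match can be sketched as a per-field comparison that rolls up into a status. This sketch is hypothetical: the comparison is a plain case-insensitive equality check, and the rule (every shared field agrees for a match, some agree for a partial match, none agree for no match) is an assumption for illustration.

```python
from dataclasses import dataclass


@dataclass
class MatchResult:
    status: str            # "match", "partial", or "no_match"
    matched: list[str]     # fields whose values agreed
    mismatched: list[str]  # fields whose values disagreed: the "why"


def explain_match(crm: dict, reference: dict) -> MatchResult:
    """Compare fields present in both records and explain the outcome."""
    shared = [f for f in crm if f in reference]
    matched = [f for f in shared
               if crm[f].strip().lower() == reference[f].strip().lower()]
    mismatched = [f for f in shared if f not in matched]
    if shared and not mismatched:
        status = "match"
    elif matched:
        status = "partial"
    else:
        status = "no_match"
    return MatchResult(status, matched, mismatched)


# Hypothetical records that agree on everything except company name.
result = explain_match(
    {"company_name": "Acme Data", "website": "acmeanalytics.com",
     "email_domain": "acmeanalytics.com", "billing_address": "1 Main St"},
    {"company_name": "Acme Analytics", "website": "acmeanalytics.com",
     "email_domain": "acmeanalytics.com", "billing_address": "1 Main St"},
)
print(result.status, result.mismatched)  # partial ['company_name']
```

Returning the lists of matched and mismatched fields, rather than a single score, is what makes the result explainable: a reader can see exactly which values drove the status.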

When we ran our sample dataset through ZoomInfo’s Match Insights, it gave us a strong indication of where potential data decay was present, and served as a guidepost for refinement.

For example, some entities in our sample dataset matched on several fields, such as website, majority email domain, and billing address, but not on company name. Closer examination of one such entity revealed two distinct company names, both of which matched several other fields, including billing address.

When we cross-referenced this with information in ZoomInfo’s Hierarchy tab, it became evident that the entity had actually rebranded from one name to the other, which accounted for the partial matches across the other values.
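The reasoning in this example can be written down as a simple rule: if a record disagrees only on company name, and the CRM name appears among the reference entity’s known former names, a rebrand is the likely explanation. The sketch below assumes a hypothetical `former_names` field standing in for the corporate history we consulted; it illustrates the reasoning only, not how ZoomInfo stores or surfaces that history, and the choice of corroborating fields is also an assumption.

```python
# Hypothetical corroborating fields: if all of these agree, the two records
# very likely describe the same entity despite a different company name.
CORROBORATING = ("website", "email_domain", "billing_address")


def _norm(value: str) -> str:
    """Lowercase and collapse whitespace for a simple comparison."""
    return " ".join(value.lower().split())


def diagnose_name_conflict(crm: dict, reference: dict) -> str:
    """Suggest an explanation for a company-name mismatch (illustrative heuristic)."""
    if _norm(crm["company_name"]) == _norm(reference["company_name"]):
        return "names_agree"
    corroborated = all(
        f in crm and f in reference and _norm(crm[f]) == _norm(reference[f])
        for f in CORROBORATING
    )
    # "former_names" is a hypothetical field standing in for corporate history.
    former = {_norm(n) for n in reference.get("former_names", [])}
    if corroborated and _norm(crm["company_name"]) in former:
        return "likely_rebrand"
    if corroborated:
        return "unexplained_name_conflict"
    return "weak_corroboration"


crm = {"company_name": "Acme Data", "website": "acmeanalytics.com",
       "email_domain": "acmeanalytics.com", "billing_address": "1 Main St"}
ref = {"company_name": "Acme Analytics", "website": "acmeanalytics.com",
       "email_domain": "acmeanalytics.com", "billing_address": "1 Main St",
       "former_names": ["Acme Data"]}

print(diagnose_name_conflict(crm, ref))  # likely_rebrand
```

In this sketch, the historical lookup is what turns a flagged conflict into an explanation: remove `former_names` and the same inputs can only produce `unexplained_name_conflict`.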

Learning from History

Many companies realize the data in their CRM isn’t entirely trustworthy. The problem is what to do with that problematic data.

Should it be purged, or merged? Is a website domain conflict a rebrand, or a redirect? How do you go about determining why a hierarchical relationship changed?

Even the best LLMs need specific direction to return useful results, and when you don’t yet know why your data is wrong, even crafting a suitable prompt is deceptively difficult. How do you know what to ask the LLM to do if you don’t know where to start?

ZoomInfo has been helping businesses go to market for almost 20 years. During that time, we’ve acquired many companies, each of which brought its own data that had to be integrated into our master databases. Beyond that, we have an extraordinary advantage: we’ve been collecting historical data for the past two decades, not just from our acquisitions’ books of business, but from our data pipelines and the work of our researchers throughout that time. The breadth of that historical data creates robust profiles in the present, and it also gives us a lot of context about the past. Distinguishing what is accurate now from what was accurate at some earlier point in time is exactly where LLMs can struggle.

Let’s take a look at how this plays out in practice.

If you prompt an LLM to find out who is on ZoomInfo’s executive team and how to contact them, much of the information returned is outdated or incorrect, for both our executive leadership and our board of directors. Beyond outdated information about who these people are, many of the contact emails provided are also wrong: they follow a formatting convention that applies only to Henry Schuck, our Chief Executive Officer.

This perfectly demonstrates how LLMs work. LLMs don’t actually know anything. They aren’t answering your question; they’re generating the most likely sequence of words in response to it.

This is why even the latest LLMs get something as fundamental as ZoomInfo’s executive leadership team wrong. If you examined ZoomInfo’s public filings over a several-year timeframe, many of them would name our former COO, because he held that position for many years. To the LLM, he looks like the most likely answer. What’s worse, the correct information is publicly accessible today. As a publicly traded company, our most recent regulatory filings include accurate information on our executive team and board of directors, which, in theory, is available to LLMs including ChatGPT.
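A toy illustration of the frequency problem: given dated observations of who held a single role across several years of filings, choosing the value that appears most often returns the long-serving former holder, while choosing the most recent observation returns the current one. Counting occurrences is only a loose analogy for how an LLM weighs what it has seen, not a description of how a model works, and the names and dates below are invented placeholders.

```python
from collections import Counter
from datetime import date

# Invented observations: (filing date, name listed for a single role).
observations = [
    (date(2019, 3, 1), "Person A"),
    (date(2020, 3, 1), "Person A"),
    (date(2021, 3, 1), "Person A"),
    (date(2022, 3, 1), "Person A"),
    (date(2023, 3, 1), "Person B"),
    (date(2024, 3, 1), "Person B"),
]


def most_frequent(obs):
    """Pick the value seen most often, ignoring when it was seen."""
    return Counter(name for _, name in obs).most_common(1)[0][0]


def most_recent(obs):
    """Pick the value from the latest observation."""
    return max(obs, key=lambda o: o[0])[1]


print(most_frequent(observations))  # Person A (former holder)
print(most_recent(observations))    # Person B (current holder)
```

Recency alone isn’t a complete answer either, since the latest source can itself be wrong, but the example shows why timestamps have to be part of the evidence.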

Even in an exercise as limited in scope as this, the limitations of LLMs for data enrichment start to become readily apparent.

LLM Data Enrichment: Another Tool in the GTM Toolbox

Our experiments using an LLM for data enrichment revealed that these tools have significant potential to improve enrichment workflows at scale. Crucially, they also highlighted the importance of building those workflows on top of reliable, accurate data, especially when it comes to discerning the often-complex relationships between corporate entities as they change over time.

As with all data enrichment initiatives, context is vital for enriching data with LLMs.

If we didn’t have ZoomInfo data to contextualize our results, we would only have half the picture. Our experiments also revealed the true scale of the technical lift that projects of this magnitude require: it was substantial even with ZoomInfo’s technical expertise and resources. Automating data enrichment for millions of accounts, all of which are constantly changing, is beyond the capabilities of many businesses, even with sophisticated LLMs.

Our experiment also had the considerable advantage of having the extensive work of ZoomInfo’s research team behind us. We evaluate all information in our Go-to-Market Intelligence Platform rigorously to ensure our customers have the most accurate data possible for their GTM efforts, using both automations and human research.

As a result, a lot of the legwork had been done for us by the time we were ready to begin analyzing the dataset.

It’s inevitable that LLMs will become an increasingly common part of data enrichment. It’s important to recognize, however, that LLM automations can only take you so far. Without a solid foundation of reliable information, AI automations can only highlight conflicts, not suggest how to resolve them.

We’re excited to continue experimenting and working with these technologies to improve how we cleanse and enrich customer data here at ZoomInfo, and we can’t wait to share the results with you.