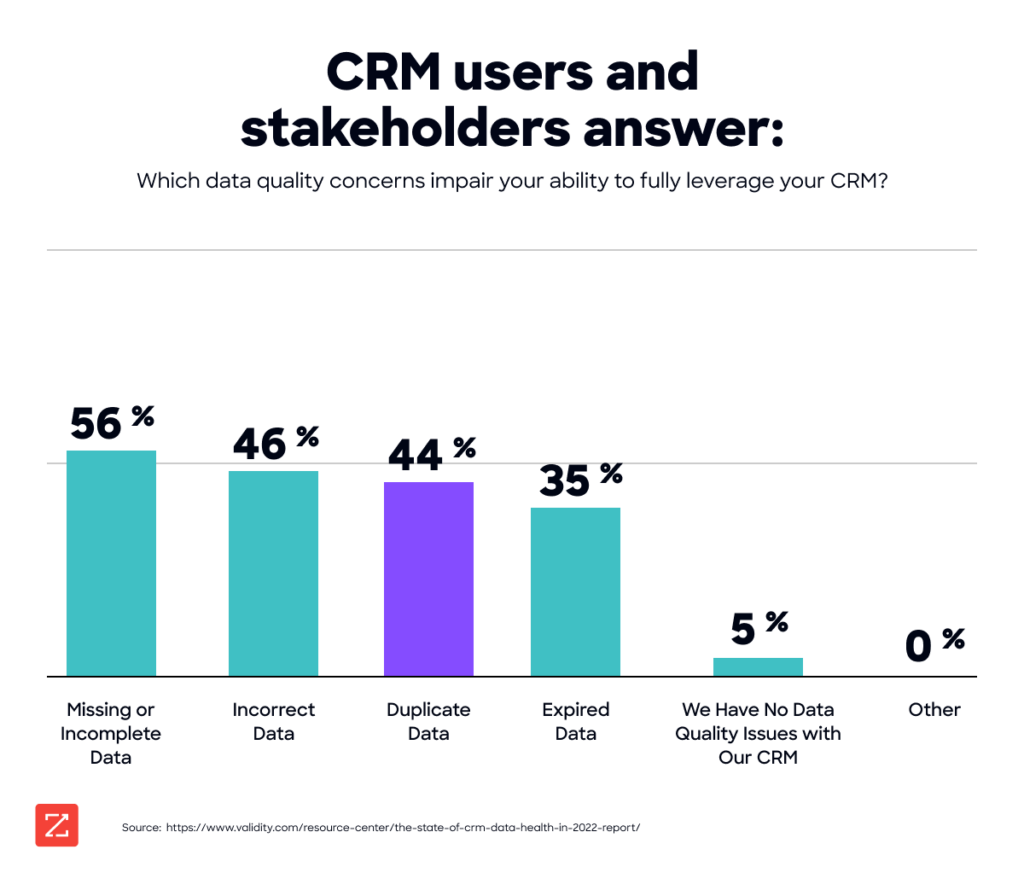

As long as humans are entering data into marketing forms, email signup lists, and CRM systems, duplicate data will never truly go away. And as the volume of data collection and processing continues to increase, the potential for duplicate data to cause havoc will only grow.

How much damage can it do? According to Gartner, poor data quality costs organizations an average of roughly $13 million per year. Perhaps more troubling, the research firm reports that 60% of businesses don’t know how much poor-quality data costs them, because they don’t measure its impact.

Modern go-to-market teams deserve a modern solution to duplicate data. Here’s how data deduplication works, how deduplication drives results across departments, and how to use automation to dedupe and orchestrate your data at scale.

Data Deduplication: What It Means, Why It Matters

Data deduplication is the process of removing redundant information from databases and lists so that each entity or record exists only once. This may involve merging or removing extra records, as well as ensuring all information is correctly populated in each field of the record.

While the details of this work will vary based on your tools and processes, the general idea is to compare blocks of data, looking for matches. Metadata is then attached to any data identified as redundant, and the duplicates are placed in backup data storage. This metadata is important because it provides a way of tracking down any removed data, in case any of it needs to be reviewed or retrieved.
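The compare, tag, and archive steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: records are plain dictionaries, two records count as exact duplicates when their email addresses match after trimming and lowercasing, and `dedupe_exact` and the field names are hypothetical. The first record seen is kept, and each later copy goes to an archive list together with metadata noting which record it duplicated and when it was removed.

```python
from datetime import datetime, timezone


def match_key(record: dict) -> str:
    # Assumed match rule: same email, ignoring case and surrounding whitespace.
    return record["email"].strip().lower()


def dedupe_exact(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Keep the first record per match key; archive later copies with metadata."""
    kept: dict[str, dict] = {}
    archive: list[dict] = []
    for record in records:
        key = match_key(record)
        if key in kept:
            # Metadata makes the removed record traceable and restorable.
            archive.append({
                "record": record,
                "duplicate_of": kept[key]["id"],
                "match_key": key,
                "removed_at": datetime.now(timezone.utc).isoformat(),
            })
        else:
            kept[key] = record
    return list(kept.values()), archive


records = [
    {"id": 1, "email": "ana@example.com", "name": "Ana Silva"},
    {"id": 2, "email": "ANA@example.com ", "name": "Ana Silva"},
    {"id": 3, "email": "raj@example.com", "name": "Raj Patel"},
]
kept, archive = dedupe_exact(records)
print([r["id"] for r in kept])               # [1, 3]
print([a["duplicate_of"] for a in archive])  # [1]
```

Keeping the archive separate, rather than deleting copies outright, is what makes the removal reversible.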

Next, indirect matches (records that are similar but not identical, such as the same company name entered with a typo) are flagged for a second review to determine whether they can be combined into a single record.
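Indirect matches are usually found with a similarity score rather than an exact comparison. The sketch below uses `difflib.SequenceMatcher` from Python’s standard library to score company names and flags pairs at or above an assumed 0.85 threshold for human review instead of merging them automatically. The threshold, the name-only comparison, and the function names are illustrative assumptions; exact matches (score 1.0) are excluded on the assumption that an earlier exact-match pass already handled them.

```python
from difflib import SequenceMatcher
from itertools import combinations

REVIEW_THRESHOLD = 0.85  # assumed cutoff; tune it against labeled examples


def similarity(a: str, b: str) -> float:
    # Ratio in [0, 1]; 1.0 means the normalized strings are identical.
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def flag_for_review(records: list[dict]) -> list[tuple[int, int, float]]:
    """Return (id_a, id_b, score) for near matches that are not exact matches."""
    flagged = []
    for a, b in combinations(records, 2):
        score = similarity(a["company"], b["company"])
        if REVIEW_THRESHOLD <= score < 1.0:
            flagged.append((a["id"], b["id"], round(score, 2)))
    return flagged


accounts = [
    {"id": 1, "company": "Acme Corporation"},
    {"id": 2, "company": "Acme Corporaton"},  # typo
    {"id": 3, "company": "Globex Inc"},
]
print(flag_for_review(accounts))
```

Comparing every pair is quadratic, so larger lists are normally split into blocks (for example, by postal code) before pairwise scoring.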

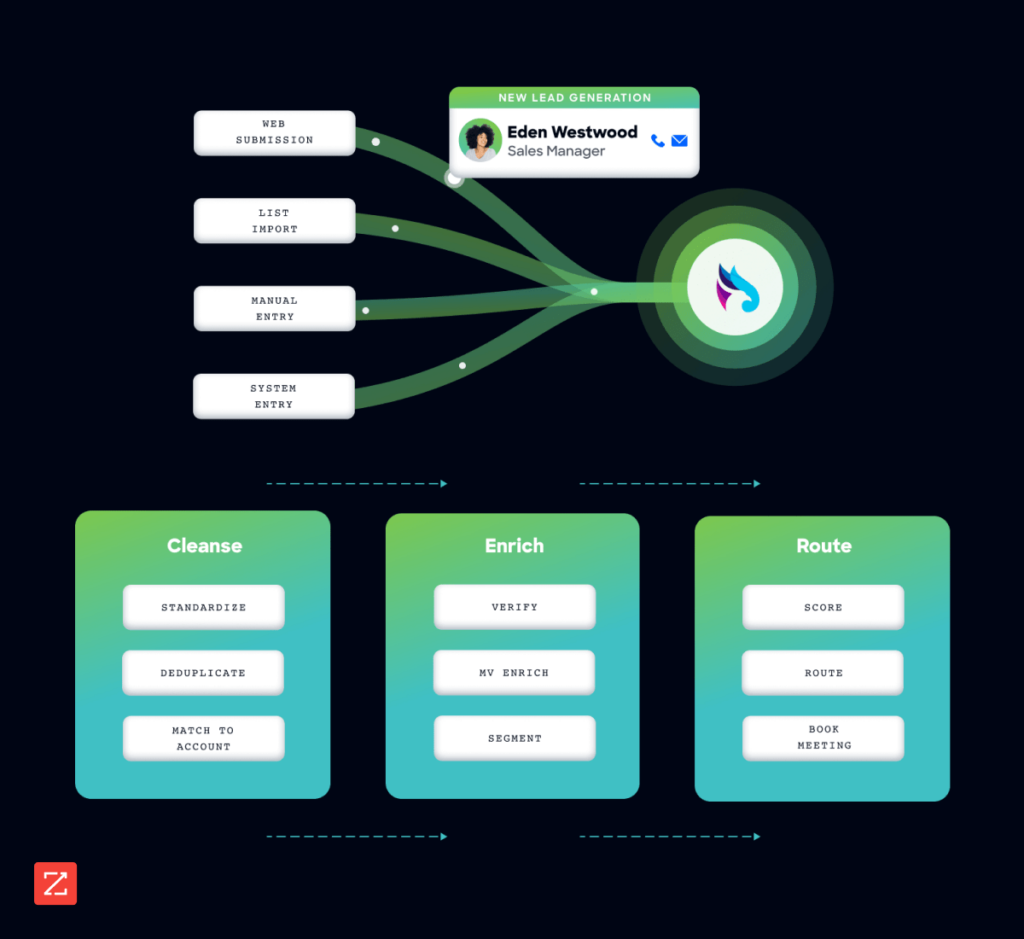

Data deduplication is one of the core components of go-to-market data orchestration — the practice of gathering, unifying, organizing, and storing data from various sources in a way that makes it easily accessible and ready for use by GTM teams.

The end-to-end data orchestration workflow includes the following steps:

The Hidden Cost of Duplicate Data

Duplicate records aren’t merely an IT inconvenience — they represent a fundamental business problem with far-reaching consequences.

When multiple versions of the same customer exist in your systems, your sales teams waste time pursuing already-engaged prospects, marketing campaigns deliver contradictory messages to the same recipient, and executives make decisions based on artificially inflated metrics.

According to IBM, poor data quality costs the U.S. economy $3.1 trillion annually, with duplicate data among the key contributors. Additionally, a study by Experian Data Quality found that businesses suspect approximately 22% of their customer and prospect data is inaccurate, leading to wasted resources and missed opportunities.

This isn’t just inefficient — it’s unsustainable in a competitive marketplace where data accuracy provides critical competitive advantages.

Understanding the Root Causes: Data Introspection

Before implementing solutions, organizations must understand why duplicates appear in their systems. This process of data introspection typically reveals four common causes:

Definitional Ambiguity: Many organizations lack clear definitions of what constitutes a duplicate. Is duplication determined at the location level or account level? Without a designated owner of this definition, inconsistent interpretations proliferate across departments.

False Duplicates: What appears to be duplication may actually represent different aspects of a complex corporate relationship. Without proper company hierarchy structures in place, separate locations of the same enterprise might be misidentified as duplicates.

Logical or Permitted Duplicates: Some apparent duplicates exist by design due to legitimate business requirements — perhaps for compliance reasons or to accommodate special account rules. These exceptions must be recognized as intentional rather than problematic.

True Duplicates: These are genuine redundancies resulting from inadequate governance processes. Without search-before-create protocols or data enrichment mechanisms, users keep creating new records instead of using existing ones.
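To make the categories concrete, the sketch below classifies a pair of account records. It assumes each record carries an ID, a company name, and a site (location), and that permitted duplicates are kept in a hand-maintained allowlist; all of these names are hypothetical. The `level` parameter shows how the same pair changes category depending on whether duplication is defined at the location or the account level, which is the ambiguity a designated definition owner has to resolve.

```python
from enum import Enum


class DuplicateType(Enum):
    NOT_DUPLICATE = "not a duplicate"
    FALSE = "false duplicate"          # related entities, e.g. separate locations
    PERMITTED = "permitted duplicate"  # kept separate on purpose
    TRUE = "true duplicate"            # genuine redundancy to merge


# Hypothetical allowlist of record pairs that business rules keep separate
# (for example, for compliance or special account handling).
PERMITTED_PAIRS = {frozenset({"A-10", "A-11"})}


def classify(a: dict, b: dict, level: str = "location") -> DuplicateType:
    """Classify a pair of account records under an assumed duplicate definition."""
    if level not in ("location", "account"):
        raise ValueError("level must be 'location' or 'account'")
    if a["company"].strip().lower() != b["company"].strip().lower():
        return DuplicateType.NOT_DUPLICATE
    if frozenset({a["id"], b["id"]}) in PERMITTED_PAIRS:
        return DuplicateType.PERMITTED
    if level == "location" and a["site"] != b["site"]:
        return DuplicateType.FALSE
    return DuplicateType.TRUE


hq = {"id": "A-1", "company": "Acme", "site": "Boston"}
branch = {"id": "A-2", "company": "Acme", "site": "Denver"}
dup = {"id": "A-3", "company": "ACME ", "site": "Boston"}

print(classify(hq, branch).value)                   # false duplicate
print(classify(hq, branch, level="account").value)  # true duplicate
print(classify(hq, dup).value)                      # true duplicate
```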

Building a Strategic Framework for Duplicate Management

Effective duplicate management requires more than occasional data cleansing projects. It demands a comprehensive approach integrating people, processes, and technology.

Discovery and Baseline Establishment

Transform anecdotal complaints about duplicates into concrete, actionable examples. This baseline assessment quantifies the scope of duplication and distinguishes necessary exceptions from problematic redundancies. Remember: deduplication isn’t the ultimate goal; it’s the natural result of good data management practices.
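One simple baseline metric is the share of records that are redundant copies of another record, computed as (total records − distinct match keys) / total records. The sketch below assumes a normalized email address is the match key, which is an illustrative choice; the rate is only as meaningful as the duplicate definition behind it. It also returns the most-duplicated keys, which serve as the concrete examples the baseline is meant to surface.

```python
from collections import Counter


def duplication_baseline(records: list[dict], key_field: str = "email"):
    """Return (duplication rate, [(match key, copy count), ...] for repeated keys)."""
    keys = Counter(r[key_field].strip().lower() for r in records)
    total = len(records)
    rate = (total - len(keys)) / total if total else 0.0
    # Concrete examples to replace anecdotes, most-duplicated first.
    examples = [(key, count) for key, count in keys.most_common() if count > 1]
    return rate, examples


crm = [
    {"email": "ana@example.com"},
    {"email": "Ana@Example.com"},
    {"email": "ana@example.com "},
    {"email": "raj@example.com"},
]
rate, examples = duplication_baseline(crm)
print(f"{rate:.0%} of records are redundant")  # 50% of records are redundant
print(examples)                                # [('ana@example.com', 3)]
```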

Cluster and Organize

Bring order to data chaos by clustering your account information into logical groupings. Leverage corporate hierarchies to associate locations with their ultimate parent companies. This organizational structure helps identify which records represent distinct entities within the same corporate family versus actual duplicates requiring consolidation.

This clustering process serves a dual purpose: it immediately improves data usability while simultaneously establishing principles for ongoing governance. By analyzing these clusters, patterns emerge that inform your organization’s definition of duplication and appropriate management responses.
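Clustering by ultimate parent can be sketched as walking a parent lookup table until reaching a company with no parent. The `PARENT_OF` mapping and company IDs below are hypothetical; in practice the hierarchy would come from your CRM or a data partner. A cycle check guards against malformed hierarchy data.

```python
from collections import defaultdict

# Hypothetical hierarchy: each company ID maps to its immediate parent.
# Companies absent from the map are their own ultimate parent.
PARENT_OF = {
    "acme-denver": "acme-us",
    "acme-boston": "acme-us",
    "acme-us": "acme-global",
}


def ultimate_parent(company_id: str) -> str:
    seen = set()
    while company_id in PARENT_OF:
        if company_id in seen:  # guard against cycles in bad hierarchy data
            raise ValueError(f"cycle in hierarchy at {company_id}")
        seen.add(company_id)
        company_id = PARENT_OF[company_id]
    return company_id


def cluster_by_parent(accounts: list[dict]) -> dict[str, list[int]]:
    """Group account IDs under the ultimate parent of their company."""
    clusters = defaultdict(list)
    for account in accounts:
        clusters[ultimate_parent(account["company_id"])].append(account["id"])
    return dict(clusters)


accounts = [
    {"id": 101, "company_id": "acme-denver"},
    {"id": 102, "company_id": "acme-boston"},
    {"id": 103, "company_id": "acme-boston"},
    {"id": 104, "company_id": "globex"},
]
print(cluster_by_parent(accounts))
# {'acme-global': [101, 102, 103], 'globex': [104]}
```

Within the `acme-global` cluster, accounts 102 and 103 point to the same location and are candidates for consolidation, while 101 is a distinct site in the same corporate family.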

Leverage Third-Party Data Partners

External data providers offer valuable resources for duplicate management through enrichment services and unique identifiers. By matching your records against authoritative external databases, you can more confidently identify and merge duplicate entries into “golden records,” the most accurate and complete representation of each customer relationship.
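A golden record can be assembled by grouping records that share an identifier supplied by a data partner and then applying a survivorship rule to each field. In the sketch below, `external_id` is a placeholder for whatever identifier your provider returns, and the rule (the most recently updated non-empty value wins) is one common choice among several; source priority or completeness scoring are equally valid alternatives.

```python
from collections import defaultdict
from datetime import date


def build_golden_records(records: list[dict]) -> dict[str, dict]:
    """Merge records sharing an external ID into one golden record per entity.

    Assumed survivorship rule: newer non-empty values overwrite older ones,
    and empty values never overwrite existing data.
    """
    groups = defaultdict(list)
    for record in records:
        groups[record["external_id"]].append(record)

    golden = {}
    for ext_id, group in groups.items():
        merged: dict = {}
        # Oldest first, so newer non-empty values win.
        for record in sorted(group, key=lambda r: r["updated"]):
            for field, value in record.items():
                if value not in (None, ""):
                    merged[field] = value
        merged["source_ids"] = sorted(r["id"] for r in group)
        golden[ext_id] = merged
    return golden


records = [
    {"id": 1, "external_id": "X-001", "name": "Acme Corp", "phone": "", "updated": date(2023, 1, 5)},
    {"id": 2, "external_id": "X-001", "name": "Acme Corporation", "phone": "555-0100", "updated": date(2022, 6, 1)},
]
print(build_golden_records(records)["X-001"]["phone"])  # 555-0100
```

The newer record supplies the name, while the older record’s phone number survives because the newer record left that field empty.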

Trusted data partners provide standardized information that helps normalize the inconsistent ways your organization captures company names, addresses, and other identifying information, inconsistencies that often lead to duplication.
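Normalization is what lets differently typed versions of the same name compare as equal. The following is a minimal sketch, assuming company names are the field being compared and that a short, hand-picked list of trailing legal suffixes is safe to ignore; both are illustrative assumptions, and a data partner’s standardized values would normally take this role.

```python
import re

# Assumed list of trailing legal-entity suffixes to ignore when comparing names.
LEGAL_SUFFIXES = {"inc", "incorporated", "corp", "corporation", "co", "llc", "ltd", "limited"}


def normalize_company(name: str) -> str:
    name = name.lower().replace("&", " and ")
    name = re.sub(r"[^\w\s]", " ", name)  # punctuation becomes a space
    tokens = name.split()                 # also collapses repeated whitespace
    while tokens and tokens[-1] in LEGAL_SUFFIXES:
        tokens.pop()
    return " ".join(tokens)


variants = ["Acme, Inc.", "ACME Inc", "Acme  Incorporated", "acme"]
print({normalize_company(v) for v in variants})  # {'acme'}
```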

Implement Search-Before-Create Protocols

To stop duplicates at the source, require users to search for an existing record before creating a new one. This simple yet effective practice ensures users find and reuse existing records first. If a user still wants to create a record that appears to duplicate an existing one, add a decision point that requires them to justify it.
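A search-before-create gate can be sketched as a create function that searches first and refuses to proceed on a match unless the user supplies a justification. The in-memory `AccountStore` class, its methods, and the domain-based matching rule are all hypothetical; a real CRM would enforce this through its own duplicate rules or validation hooks. Each justified override is logged so a data steward can review it.

```python
from typing import Optional


class DuplicateSuspected(Exception):
    """Raised when a create request matches existing records without justification."""


class AccountStore:
    """Toy in-memory store illustrating a search-before-create gate."""

    def __init__(self) -> None:
        self.accounts: list[dict] = []
        self.overrides: list[dict] = []  # audit trail for data stewards

    def search(self, domain: str) -> list[dict]:
        # Assumed matching rule: same website domain, case-insensitive.
        return [a for a in self.accounts if a["domain"] == domain.lower()]

    def create(self, name: str, domain: str, justification: Optional[str] = None) -> dict:
        matches = self.search(domain)
        if matches and not justification:
            raise DuplicateSuspected(f"{len(matches)} existing account(s) use {domain}")
        account = {"id": len(self.accounts) + 1, "name": name, "domain": domain.lower()}
        self.accounts.append(account)
        if matches:
            # Record why the match was bypassed so a steward can review it.
            self.overrides.append({"account_id": account["id"], "reason": justification})
        return account


store = AccountStore()
store.create("Acme", "acme.com")
try:
    store.create("Acme Corp", "ACME.com")
except DuplicateSuspected as err:
    print("Blocked:", err)  # Blocked: 1 existing account(s) use ACME.com
store.create("Acme (EU billing entity)", "acme.com", justification="Separate EU billing required")
print(len(store.accounts), len(store.overrides))  # 2 1
```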

Data stewards play a crucial role in enforcing these protocols while balancing efficiency with compliance. Their oversight ensures adherence to established data governance principles without creating excessive friction in business processes.

Align with Business Requirements

Successful duplicate management requires understanding the business purpose behind each record. Different departments may have legitimate reasons for maintaining separate customer records, such as tracking distinct business relationships or complying with differing regulatory requirements.

By collaborating closely with business users, data teams can align management practices with operational needs, rather than imposing technically perfect but functionally problematic solutions. This collaborative approach builds organizational buy-in while ensuring data structures support rather than hinder business objectives.

Protect and Enrich Continuously

Maintaining data quality requires ongoing vigilance. Implement processes to continuously validate and enhance your customer information, keeping records updated and complete. Data orchestration tools, combined with third-party enrichment services, can automatically refresh information, reducing the likelihood that outdated records will prompt users to create duplicates.

This continuous enrichment approach shifts data quality work from reactive cleanup to proactive management, reducing the resources required for data maintenance while improving accuracy.
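A scheduled job that re-verifies aging records is one way to put continuous enrichment into practice. The sketch below assumes each record carries a `last_verified` date, a 90-day freshness policy, and an `enrich` callable standing in for a third-party enrichment service; none of these come from a specific product. Empty values returned by the enrichment source are ignored so they cannot erase existing data.

```python
from datetime import date, timedelta
from typing import Callable

MAX_AGE = timedelta(days=90)  # assumed freshness policy


def stale_records(records: list[dict], today: date) -> list[dict]:
    """Records whose last verification is older than MAX_AGE."""
    return [r for r in records if today - r["last_verified"] > MAX_AGE]


def refresh(records: list[dict], today: date, enrich: Callable[[dict], dict]) -> int:
    """Update stale records in place from `enrich`; return how many were refreshed."""
    stale = stale_records(records, today)
    for record in stale:
        updates = enrich(record)
        # Ignore empty values so a sparse response cannot erase existing data.
        record.update({k: v for k, v in updates.items() if v not in (None, "")})
        record["last_verified"] = today
    return len(stale)


def fake_enrich(record: dict) -> dict:
    # Hypothetical stand-in for a third-party enrichment lookup.
    return {"employees": 250, "phone": None}


crm = [
    {"id": 1, "employees": 120, "phone": "555-0100", "last_verified": date(2024, 1, 10)},
    {"id": 2, "employees": 40, "phone": "555-0199", "last_verified": date(2024, 5, 1)},
]
print(refresh(crm, today=date(2024, 6, 1), enrich=fake_enrich))  # 1
```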

Future-Proofing Your Data Strategy

Remember that deduplication itself isn’t the goal — it’s the natural outcome of effective data management aligned with business objectives.

As your organization evolves, your data governance must adapt accordingly:

Regularly revisit your duplication definitions as business relationships and structures change

Maintain active engagement between data teams and business units to ensure alignment

Measure and communicate the business impact of improved data quality, not just technical metrics

Incorporate duplicate prevention into your digital transformation initiatives rather than treating it as a separate challenge

Taking Action Now: Your Path Forward

The cost of inaction on duplicate data far exceeds the investment required to address it systematically. Organizations that successfully tackle this challenge gain significant advantages in operational efficiency, customer experience, and strategic decision-making.

To get started:

Conduct a rapid assessment of your current duplication rates and their business impact

Establish clear ownership of data quality within your organization

Develop consensus definitions of duplicates specific to your business context

Implement at least one preventative measure (like search-before-create) within the next 30 days

Create a roadmap for developing more sophisticated capabilities over time

The organizations that thrive in today’s data-centric business environment aren’t those with perfect data, but the ones that systematically identify, manage, and prevent the issues that undermine data integrity. By addressing duplicate records through comprehensive governance rather than periodic cleansing, you transform a persistent challenge into a sustainable competitive advantage.

Will your organization continue treating duplicates as a technical nuisance, or will you recognize them as a strategic opportunity to fundamentally enhance your business capabilities? The choice is yours — and the time to act is now.